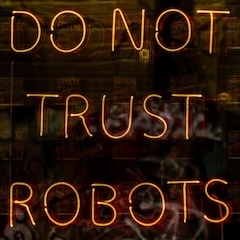

Do I have your attention yet?

Threat

Prompt Injection Attacks represent one of the most pervasive threats. These attacks manipulate AI systems through malicious inputs, potentially leading to unauthorized access, data leakage, or system compromise. The evolving sophistication of these attacks makes detection difficult.

AI Agent Vulnerabilities pose particularly severe risks as autonomous AI systems become more prevalent. Agentic systems are especially vulnerable to tool misuse, goal manipulation, and identity spoofing.

Generative AI Data Leakage has emerged as a critical concern. Employee prompts to AI tools can contain sensitive data, including customer information, employee PII , and legal/financial details. The problem is exacerbated by "shadow AI" usage, where unauthorized AI system usage is seen as a major risk.

Mitigation

Validation Techniques that assess prompts against predefined rules or a catalog of acceptable formats are being investigated. These techniques aim to establish guardrails for AI agent usage while preserving creative freedom.

Know Your Agent capabilities are being integrated into identity and access management systems, but the governance processes for these systems are lacking

Prompt Auditing and Control is another form of validation that occurs before the prompt is sent to the agent. Attempting to audit the AI’s output after it has been generated is akin to locking the barn after the horse has escaped.

These are only a few of the issues artificial intelligence has raised. Research continues into the threat landscape, technical solutions to mitigate the threats, and the creation of governance and policy to control this rapidly emerging technology.